Posts posted by Al Capone™

-

We tend to think of all-in-one computers as underperforming brethren of fully fledged 'classic' desktop workstations, but Alafia AI, a startup specializing in modern medical imaging appliances, aims to prove us wrong. The company's all-in-one Alafia Aivas SuperWorkstation for medical imaging packs a 128-core Ampere Altra processor and two Nvidia RTX professional graphics cards (via Joe Speed).

The machine is indeed quite powerful. Equipped with a 4K touch-sensitive display with up to 360 nits brightness, the workstation packs Ampere's Altra 128-core processor running at 3.0 GHz, two Nvidia RTX graphics cards with up to 28,416 cores (though the configuration shows Nvidia's RTX 4000 and RTX A3000 graphics cards), 2TB of DDR4 memory, and an up to 8TB solid-state drive.

"Alafia Ai is making 128 core medical imaging AI developer workstations with 4K rotating display, Ampere Altra Dev Kit 128c 7 built-in," wrote Joe Speed, the head of Ampere's edge computing division. "When asked 'Why Ampere,' Alafia said medical imaging tools are CPU intensive, and clinicians will run many apps at the same time."

Alafia AI calls its system a purpose-built clinical research appliance, to a large degree, because not many applications support both Arm-based Ampere Altra processors and Nvidia's inference capabilities. The company aims to ship the hardware in Q2 2024, expects massively parallel compute application integration in the third quarter, and then projects ecosystem device integration in Q4 2024.

For now, the machine is mainly aimed at software developers. Still, institutions that can tailor their applications to the Arm CPU architecture can buy and deploy it if they find its performance and capabilities suitable for their needs. Alafia AI has a GitHub site that links to programs for its platforms. Meanwhile, the company clearly states that those who deploy the workstations in the field are responsible for the results they produce.

"You are responsible for instituting human review as part of any use of Alafia Ai, Inc. products or services, including in association with any third-party product intended to inform clinical decision-making," a statement on the Alafia AI website reads. "The Alafia Ai, Inc. workstation, servers and/or products and services should only be used in patient care or clinical scenarios after review by trained medical professionals applying sound medical judgment."

-

Samsung Galaxy M55 5G is confirmed to launch in India soon alongside the Galaxy M15 5G. The phones had previously been teased for an Indian launch, and their Amazon availability had also been confirmed. Now the India launch date of the smartphones has been announced. Samsung has also revealed the colour options and a few key features of the handsets, including chipset, camera, display, battery and charging details. Notably, the phones were recently unveiled in select global markets.

The Amazon microsites for both the Galaxy M55 5G and the Galaxy M15 5G confirm that the phones will launch in India on April 8 at 12pm IST. The pages also reveal several key features of the handsets. The Galaxy M55 5G will be offered in the country in Denim Black and Light Green shades, while the Galaxy M15 5G is confirmed to come in three colour options - Blue Topaz, Celestine Blue, and Stone Grey.

The Indian variant of the Galaxy M55 5G is set to come with Qualcomm's Snapdragon 7 Gen 1 SoC and a 6.7-inch full-HD+ Super AMOLED Plus display with a 120Hz refresh rate and 1,000 nits of peak brightness. It will be equipped with Vision Booster technology, which is claimed to help users see the screen clearly even under bright sunlight. The phone will be backed by a 5,000mAh battery with support for 45W wired fast charging.

For optics, the Galaxy M55 5G will carry a triple rear camera unit including a 50-megapixel primary sensor with optical image stabilisation (OIS). The phone is confirmed to sport a 50-megapixel front camera sensor for selfies and video calls. It will have a Nightography feature and AI-backed tools like Image Clipper and Object Eraser.

The Galaxy M15 5G, on the other hand, will have a MediaTek Dimensity 6100+ chipset and feature a 6.5-inch Super AMOLED display. It will also sport a 50-megapixel triple rear camera unit. The handset will be backed by a 6,000mAh battery that is claimed to offer a battery life of up to two days.

Both Galaxy M55 5G and Galaxy M15 5G models are also confirmed to be equipped with Samsung's Knox Security, while the former will also come with the Samsung Wallet feature.

An earlier leak suggested that the Galaxy M55 5G will start in India at Rs. 26,999 for the 8GB + 128GB option, while the 8GB + 256GB and 12GB + 256GB variants could be priced at Rs. 29,999 and Rs. 32,999, respectively. Meanwhile, the Galaxy M15 5G has been tipped to cost Rs. 13,499 for the 4GB + 128GB option and Rs. 14,999 for the 6GB + 128GB variant.

-

https://techxplore.com/news/2024-03-method-optimizes-autonomous-ship.html

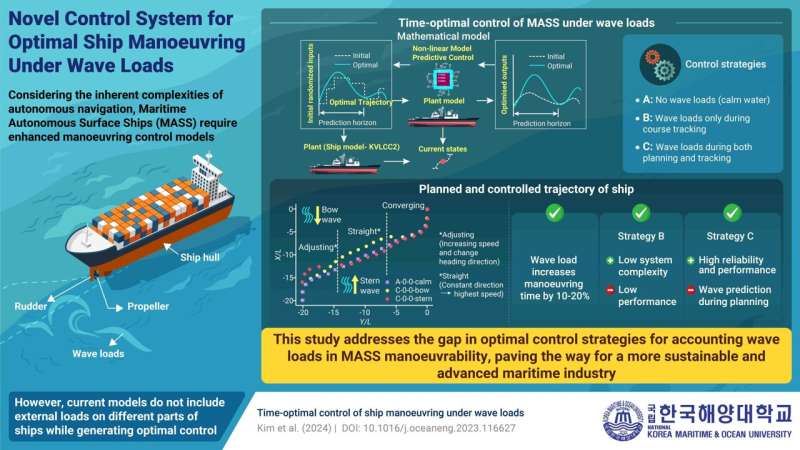

The study of ship maneuvering at sea has long been the central focus of the shipping industry. With the rapid advancements in remote control, communication technologies, and artificial intelligence, the concept of Maritime Autonomous Surface Ships (MASS) has emerged as a promising solution for autonomous marine navigation. This shift highlights the growing need for optimal control models for autonomous ship maneuvering.

Designing a control system for time-efficient ship maneuvering is one of the most difficult challenges in autonomous ship control. While many studies have investigated this problem and proposed various control methods, including Model Predictive Control (MPC), most have focused on control in calm waters, which do not represent real operating conditions.

At sea, ships are continuously affected by different external loads, with loads from sea waves being the most significant factor affecting maneuvering performance.

To address this gap, a team of researchers, led by Assistant Professor Daejeong Kim from the Division of Navigation Convergence Studies at the Korea Maritime & Ocean University in South Korea, designed a novel time-optimal control method for MASS. "Our control model accounts for various forces that act on the ship, enabling MASS to navigate better and track targets in dynamic sea conditions," says Dr. Kim. Their study was published in Ocean Engineering.

At the heart of this innovative control system is a comprehensive mathematical ship model that accounts for various forces in the sea, including wave loads, acting on key parts of a ship such as the hull, propellers, and rudders. However, this model cannot be directly used to optimize the maneuvering time.

For this, the researchers developed a novel time optimization model that transforms the mathematical model from a temporal formulation to a spatial one, turning the maneuvering time itself into a quantity the controller can minimize.
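The article does not give the researchers' equations. As a hedged illustration of why a spatial formulation helps, consider the standard identity used in many time-optimal path-following problems: if s is the distance travelled along the path and v(s) > 0 is the speed there, then dt/ds = 1/v(s), and the maneuvering time becomes T = ∫₀^S ds / v(s), which an optimizer can minimize directly over a fixed path length S. The sketch below evaluates that integral with the trapezoidal rule for a speed profile sampled along the path; the function name and the sampling scheme are hypothetical.

```python
def maneuvering_time(s_points, speeds):
    """Approximate T = integral of ds / v(s) with the trapezoidal rule.

    s_points: strictly increasing positions along the path [m]
    speeds:   ship speed at each position [m/s], all positive
    """
    if len(s_points) != len(speeds) or len(s_points) < 2:
        raise ValueError("need at least two matching samples")
    total = 0.0
    for i in range(1, len(s_points)):
        ds = s_points[i] - s_points[i - 1]
        if ds <= 0:
            raise ValueError("path positions must be strictly increasing")
        if speeds[i - 1] <= 0 or speeds[i] <= 0:
            raise ValueError("speeds must be positive")
        # dt/ds = 1/v, so average the "slowness" 1/v over each segment
        total += 0.5 * ds * (1.0 / speeds[i - 1] + 1.0 / speeds[i])
    return total


# A constant 5 m/s over 1,000 m takes about 200 s
print(maneuvering_time([0.0, 500.0, 1000.0], [5.0, 5.0, 5.0]))
```

Because the path length is fixed in this formulation, anything that slows the ship on part of the path (such as added resistance from waves) shows up directly as a larger T.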

These two models were integrated into a nonlinear MPC controller to achieve time-optimal control. The researchers tested this controller by simulating a real ship model navigating in the sea under different wave loads.
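The study's nonlinear MPC, with its full hull, propeller, rudder and wave-load model, is not reproduced here. To illustrate only the receding-horizon idea, the toy sketch below swaps in a first-order Nomoto yaw model (a standard simplified steering model, used here as an assumption) and a crude search: at every step it simulates each candidate rudder angle held constant over the horizon, picks the cheapest, applies it for one step, and then re-plans. The gains, horizon, cost weights and the constant `disturbance` term standing in for wave loads are all assumptions.

```python
import math

# Toy first-order Nomoto yaw model, T * r_dot + r = K * delta.
# K and T_NOM are assumed values, not parameters from the study.
K = 0.1       # rudder gain [1/s]
T_NOM = 20.0  # yaw time constant [s]
DT = 1.0      # control/simulation step [s]


def step(psi, r, delta, disturbance=0.0):
    """One explicit-Euler step of heading psi [rad] and yaw rate r [rad/s].

    `disturbance` is an extra yaw acceleration standing in for wave loads.
    """
    r_next = r + DT * ((K * delta - r) / T_NOM + disturbance)
    psi_next = psi + DT * r
    return psi_next, r_next


def mpc_rudder(psi, r, psi_ref, horizon=20, predicted_disturbance=0.0):
    """Receding-horizon choice: simulate each candidate rudder angle held
    constant over the horizon and return the one with the lowest cost."""
    candidates = [math.radians(a) for a in range(-35, 36, 5)]
    best_delta, best_cost = 0.0, float("inf")
    for delta in candidates:
        p, q, cost = psi, r, 0.0
        for _ in range(horizon):
            p, q = step(p, q, delta, predicted_disturbance)
            # squared heading error plus a small rudder-effort penalty
            cost += (p - psi_ref) ** 2 + 0.01 * delta ** 2
        if cost < best_cost:
            best_delta, best_cost = delta, cost
    return best_delta


def run(psi_ref, steps=300, wave=0.0, plan_with_wave=True):
    """Closed loop: re-plan every step and apply only the chosen input."""
    psi, r = 0.0, 0.0
    for _ in range(steps):
        predicted = wave if plan_with_wave else 0.0
        delta = mpc_rudder(psi, r, psi_ref, predicted_disturbance=predicted)
        psi, r = step(psi, r, delta, wave)
    return psi
```

Calling `run(math.radians(30), wave=0.0005)` with `plan_with_wave=False` and then `True` compares a planner that ignores the disturbance with one that anticipates it. This is only a loose analogy to the study's strategies, and no particular result is implied.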

Additionally, for effective course planning and tracking, researchers proposed three control strategies: Strategy A excluded wave loads during both the planning and tracking stages, serving as a reference; Strategy B included wave loads only in the planning stage, and Strategy C included wave loads in both stages, measuring their influence on both propulsion and steering.

Experiments revealed that wave loads increased the expected maneuvering time in both strategies B and C. Comparing the two, the researchers found strategy B to be simpler but less effective than strategy C, which proved more reliable. However, strategy C places an additional burden on the controller by also including wave load prediction in the tracking stage.

"Our method enhances the efficiency and safety of autonomous vessel operations and potentially reduces shipping costs and carbon emissions, benefiting various sectors of the economy," remarks Dr. Kim, highlighting the potential of this study. "Overall, our study addresses a critical gap in autonomous ship maneuvering which could contribute to the development of a more technologically advanced maritime industry."

-

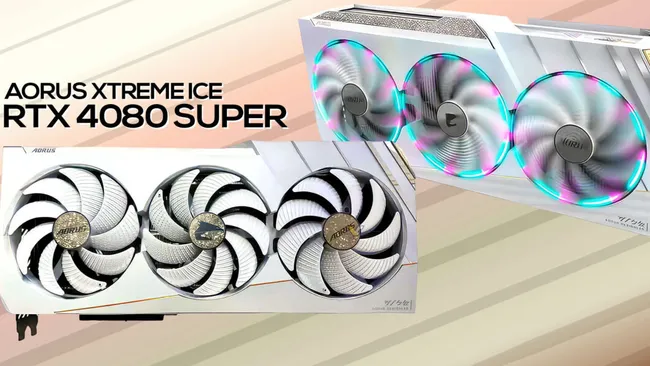

According to a Gigabyte Vietnam Facebook post, the photos of the Gigabyte Aorus Xtreme Ice RTX 4080 Super with "Bionic shark fans" spotted yesterday are legitimate, and they point to an imminent but unspecified limited-run release. The white take on the previously black-and-gold Aorus Xtreme design looks pretty grandiose in its own right, and the GPU is one of the best graphics cards.

Similar to Gigabyte's other Aorus Ice GPUs, the Xtreme Ice is a white, air-cooled GPU. However, this is a break from the Aorus Xtreme tradition of WaterForce GPU coolers. That could be why these will apparently be limited to only 300 GPUs, according to VideoCardz, sold in bundles that include everything you'd need except for a case, CPU, and SSD.

We reached out to Gigabyte for comment, and the company told us: "The XTREME ICE is a limited-edition series that will be launching in the near future. Availability is expected to be very tight. The MB and GPUs will include an exclusive gold serial plaque."

Compared to the stock RTX 4080 Super, the Gigabyte Aorus Xtreme Ice differences include a 150 MHz overclock to 2.7 GHz, RGB lighting, and a built-in screen. The massive triple-fan, triple-slot cooler design will appeal to users who have plenty of room in their cases, and who value cooler temperatures.

Gigabyte boasts in its announcement slides that its 2.7 GHz clock is an unprecedented factory OC for the 4080 Super. Additionally, the company estimates 885 AI TOPS of performance compared to the 836 AI TOPS of the stock 4080 Super, a nice bonus for those who wish to run AI workloads. The cards will feature dual BIOS functionality and come with a four-year warranty.
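Gigabyte's 885 AI TOPS estimate is consistent with simply scaling the stock figure by clock speed. The 150 MHz overclock to 2.7 GHz implies a stock boost clock of 2,550 MHz. Assuming peak tensor throughput scales linearly with boost clock (an assumption, since real workloads also depend on memory bandwidth and power limits), the arithmetic works out as follows:

```python
STOCK_AI_TOPS = 836
OC_BOOST_MHZ = 2_700
STOCK_BOOST_MHZ = OC_BOOST_MHZ - 150  # implied by "150 MHz overclock to 2.7 GHz"


def scaled_tops(stock_tops, stock_mhz, new_mhz):
    """Scale peak throughput linearly with clock (a simplifying assumption)."""
    return stock_tops * new_mhz / stock_mhz


estimate = scaled_tops(STOCK_AI_TOPS, STOCK_BOOST_MHZ, OC_BOOST_MHZ)
uplift_pct = (OC_BOOST_MHZ / STOCK_BOOST_MHZ - 1) * 100
print(f"{estimate:.0f} AI TOPS, +{uplift_pct:.1f}% clock")  # 885 AI TOPS, +5.9% clock
```

The roughly 6% clock bump accounts for almost exactly the quoted TOPS gain, which suggests the estimate is a paper calculation rather than a measured result.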

The Gigabyte Aorus Xtreme Ice RTX 4080 Super will be available in a limited-run bundle, with an unknown launch date. The GPUs will come bundled with other white Aorus PC components, specifically a 1000W power supply, an Intel Z790 motherboard, and a 360mm liquid cooler. Curiously, Gigabyte isn't including one of its Aorus Gen5 SSDs.

The total bundle will likely cost quite a bit, considering its components. The GPU on its own starts at $999, and overclocked variants can go for $1,300 or more. We'll have to wait and see, but $2,000 (give or take) for the entire bundle wouldn't be shocking. Rumors suggest the bundle could go on sale within the next few weeks.

-

@ayaanali has been removed from our team. Reason: Retired.

@Dean Ambrose™ has been removed from our team. Reason: Lack of interest, last post (28 February)

-

@X A V I ™ has been removed from our team. Reason: Absent

-

Pro.

-

Rejected.

-

Voted ❤️

-

Poco Global executive David Liu recently teased the arrival of a new Poco F series phone without confirming its exact moniker. It is tipped to be the Poco F6. The Xiaomi sub-brand is yet to announce the launch date of the new F series device, but ahead of that, its specifications have emerged online. It is anticipated to run on the newly released Snapdragon 8s Gen 3 SoC and is said to feature a 50-megapixel Sony IMX882 sensor. The Poco F6 has been speculated to debut as a rebranded version of the unannounced Redmi Note 13 Turbo.

Android Headlines, citing HyperOS source code, claims that the Poco F6 will run on the Snapdragon 8s Gen 3 SoC. The Poco phone codenamed “peridot” will be the second handset in the world to run on the latest Qualcomm chipset. Xiaomi launched the Civi 4 Pro as the first phone with a Snapdragon 8s Gen 3 chipset earlier this month.

Further, the report states that the Poco F6 will feature a Sony IMX882 50-megapixel primary camera, the same sensor used in the Realme 12 Pro 5G and the iQoo Z9 5G. The camera setup will reportedly include an 8-megapixel Sony IMX355 ultra wide-angle sensor as well. The phone is said to carry the internal model number “N16T”.

Poco has reportedly used display panels from TCL and Tianma on the Poco F6. However, there is no word on the display specifications yet.

Poco F6 has been in the rumour mill for quite some time. Poco Global executive David Liu (@DavidBlueLS) recently seemingly confirmed the existence of the device. It is anticipated to go official between April and May alongside the Poco F6 Pro. It was earlier rumoured to come as a rebranded version of the unannounced Redmi Note 13 Turbo.

The Poco F6 is believed to come with upgrades over last year's Poco F5 5G, which was unveiled in May last year with a price tag of Rs. 29,999 for the 8GB RAM + 256GB storage variant.

-

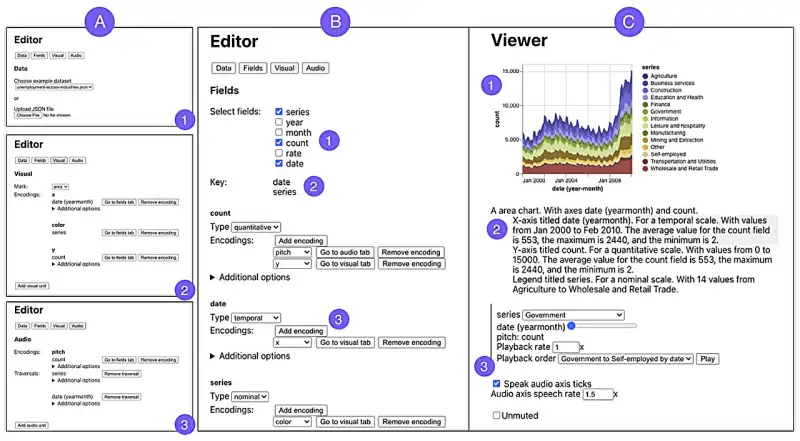

A growing number of tools enable users to make online data representations, like charts, that are accessible for people who are blind or have low vision. However, most tools require an existing visual chart that can then be converted into an accessible format.

This creates barriers that prevent blind and low-vision users from building their own custom data representations, and it can limit their ability to explore and analyze important information.

A team of researchers from MIT and University College London (UCL) wants to change the way people think about accessible data representations.

They created a software system called Umwelt (which means "environment" in German) that can enable blind and low-vision users to build customized, multimodal data representations without needing an initial visual chart.

Umwelt, an authoring environment designed for screen-reader users, incorporates an editor that allows someone to upload a dataset and create a customized representation, such as a scatterplot, that can include three modalities: visualization, textual description, and sonification. Sonification involves converting data into nonspeech audio.

The system, which can represent a variety of data types, includes a viewer that enables a blind or low-vision user to interactively explore a data representation, seamlessly switching between each modality to interact with data in a different way.

The researchers conducted a study with five expert screen-reader users who found Umwelt to be useful and easy to learn. In addition to offering an interface that empowered them to create data representations—something they said was sorely lacking—the users said Umwelt could facilitate communication between people who rely on different senses.

"We have to remember that blind and low-vision people aren't isolated. They exist in these contexts where they want to talk to other people about data," says Jonathan Zong, an electrical engineering and computer science (EECS) graduate student and lead author of a paper introducing Umwelt.

"I am hopeful that Umwelt helps shift the way that researchers think about accessible data analysis. Enabling the full participation of blind and low-vision people in data analysis involves seeing visualization as just one piece of this bigger, multisensory puzzle."

Joining Zong on the paper are fellow EECS graduate students Isabella Pedraza Pineros and Mengzhu "Katie" Chen; Daniel Hajas, a UCL researcher who works with the Global Disability Innovation Hub; and senior author Arvind Satyanarayan, associate professor of computer science at MIT who leads the Visualization Group in the Computer Science and Artificial Intelligence Laboratory.

The paper will be presented at the ACM Conference on Human Factors in Computing Systems (CHI 2024), held May 11–16 in Honolulu. The findings are published on the arXiv preprint server.

De-centering visualization

The researchers previously developed interactive interfaces that provide a richer experience for screen reader users as they explore accessible data representations. Through that work, they realized most tools for creating such representations involve converting existing visual charts.

Aiming to decenter visual representations in data analysis, Zong and Hajas, who lost his sight at age 16, began co-designing Umwelt more than a year ago.

At the outset, they realized they would need to rethink how to represent the same data using visual, auditory, and textual forms.

"We had to put a common denominator behind the three modalities. By creating this new language for representations, and making the output and input accessible, the whole is greater than the sum of its parts," says Hajas.

To build Umwelt, they first considered what is unique about the way people use each sense.

For instance, a sighted user can see the overall pattern of a scatterplot and, at the same time, move their eyes to focus on different data points. But for someone listening to a sonification, the experience is linear since data are converted into tones that must be played back one at a time.
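Umwelt's own sonification engine isn't described in detail here. As a minimal sketch of the general idea, assuming a linear value-to-pitch mapping, the code below turns each data value into a sine tone and writes the tones one after another to a WAV file using only Python's standard library. The pitch range, tone length and file name are assumptions. The loop makes the point above concrete: a listener hears one data point at a time, in whatever order the traversal chooses.

```python
import math
import struct
import wave

SAMPLE_RATE = 22_050
LOW_HZ, HIGH_HZ = 220.0, 880.0  # assumed pitch range (A3 to A5)
TONE_SECONDS = 0.25             # assumed duration per data point


def value_to_pitch(value, vmin, vmax):
    """Map a data value linearly onto the pitch range."""
    if vmax == vmin:
        return (LOW_HZ + HIGH_HZ) / 2
    frac = (value - vmin) / (vmax - vmin)
    return LOW_HZ + frac * (HIGH_HZ - LOW_HZ)


def sonify(values, path="sonification.wav"):
    """Write one sine tone per value, strictly in sequence; return the pitches."""
    vmin, vmax = min(values), max(values)
    n = int(SAMPLE_RATE * TONE_SECONDS)
    frames = bytearray()
    pitches = []
    for v in values:  # listeners hear the points one at a time, in this order
        freq = value_to_pitch(v, vmin, vmax)
        pitches.append(freq)
        for i in range(n):
            sample = 0.5 * math.sin(2 * math.pi * freq * i / SAMPLE_RATE)
            frames += struct.pack("<h", int(sample * 32767))  # 16-bit PCM
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(bytes(frames))
    return pitches
```

For example, `sonify([1, 2, 3])` produces three rising tones at 220, 550 and 880 Hz.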

"If you are only thinking about directly translating visual features into nonvisual features, then you miss out on the unique strengths and weaknesses of each modality," Zong adds.

They designed Umwelt to offer flexibility, enabling a user to switch between modalities easily when one would better suit their task at a given time.

To use the editor, one uploads a dataset to Umwelt, which employs heuristics to automatically create default representations in each modality.

If the dataset contains stock prices for companies, Umwelt might generate a multiseries line chart, a textual structure that groups data by ticker symbol and date, and a sonification that uses tone length to represent the price for each date, arranged by ticker symbol.
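The article doesn't spell out Umwelt's heuristics or its specification format. Here is a hypothetical sketch of how field types alone could yield the stock-price defaults described above: a temporal field becomes the x axis and the innermost grouping level, a nominal field splits the data into series, and a quantitative field drives both the y axis and the tone length. The `Field` type, the type labels and the dictionary layout are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Field:
    name: str
    kind: str  # assumed labels: "temporal", "nominal" or "quantitative"


def default_spec(fields):
    """Hypothetical heuristic: derive linked defaults for three modalities."""
    temporal = [f.name for f in fields if f.kind == "temporal"]
    nominal = [f.name for f in fields if f.kind == "nominal"]
    quantitative = [f.name for f in fields if f.kind == "quantitative"]
    if not (temporal and quantitative):
        raise ValueError("this sketch only handles time series")
    x, y = temporal[0], quantitative[0]
    series = nominal[0] if nominal else None

    visual = {"mark": "line", "x": x, "y": y}
    if series:
        visual["color"] = series  # one line per series: a multiseries chart
    # Group by series first, then by time (e.g. ticker symbol, then date)
    levels = [series, x] if series else [x]
    text = {"group_by": list(levels)}
    # Tone length encodes the quantitative value, traversed in the same order
    audio = {"encode": {"duration": y}, "traverse": list(levels)}
    return {"visual": visual, "text": text, "audio": audio}
```

With fields `symbol` (nominal), `date` (temporal) and `price` (quantitative), this yields a line chart colored by symbol, a text structure grouped by symbol and then date, and a sonification that maps price to tone duration, traversed by symbol.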

The default heuristics are intended to help the user get started.

"In any kind of creative tool, you have a blank-slate effect where it is hard to know how to begin. That is compounded in a multimodal tool because you have to specify things in three different representations," Zong says.

The editor links interactions across modalities, so if a user changes the textual description, that information is adjusted in the corresponding sonification. Someone could utilize the editor to build a multimodal representation, switch to the viewer for an initial exploration, then return to the editor to make adjustments.
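One common way to keep modalities in sync is to have them all render from a single shared specification rather than from their own copies. The hypothetical sketch below is not Umwelt's actual code. It regroups the data through the textual outline, and the sonification's traversal order follows automatically. All class and method names are invented.

```python
class SharedSpec:
    """Hypothetical single source of truth that every modality renders from."""

    def __init__(self, group_by):
        self.group_by = list(group_by)
        self._listeners = []

    def subscribe(self, render):
        self._listeners.append(render)
        render(self)  # draw once with the current state

    def set_group_by(self, group_by):
        self.group_by = list(group_by)
        for render in self._listeners:
            render(self)  # every modality re-renders from the same spec


class TextOutline:
    def __init__(self):
        self.levels = []

    def render(self, spec):
        self.levels = list(spec.group_by)

    def regroup(self, spec, levels):
        # Edits go to the shared spec, not to the outline's local state
        spec.set_group_by(levels)


class Sonification:
    def __init__(self):
        self.order = []

    def render(self, spec):
        self.order = list(spec.group_by)  # traversal follows the grouping
```

Because the outline never changes its own state directly, an edit made in one modality cannot leave the others stale.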

Helping users communicate about data

To test Umwelt, they created a diverse set of multimodal representations, from scatterplots to multiview charts, to ensure the system could effectively represent different data types. Then they put the tool in the hands of five expert screen reader users.

Study participants mostly found Umwelt to be useful for creating, exploring, and discussing data representations. One user said Umwelt was like an "enabler" that decreased the time it took them to analyze data. The users agreed that Umwelt could help them communicate about data more easily with sighted colleagues.

"What stands out about Umwelt is its core philosophy of de-emphasizing the visual in favor of a balanced, multisensory data experience. Often, nonvisual data representations are relegated to the status of secondary considerations, mere add-ons to their visual counterparts. However, visualization is merely one aspect of data representation.

"I appreciate their efforts in shifting this perception and embracing a more inclusive approach to data science," says JooYoung Seo, an assistant professor in the School of Information Sciences at the University of Illinois at Urbana-Champaign, who was not involved with this work.

Moving forward, the researchers plan to create an open-source version of Umwelt that others can build upon. They also want to integrate tactile sensing into the software system as an additional modality, enabling the use of tools like refreshable tactile graphics displays.

"In addition to its impact on end users, I am hoping that Umwelt can be a platform for asking scientific questions around how people use and perceive multimodal representations, and how we can improve the design beyond this initial step," says Zong.

-

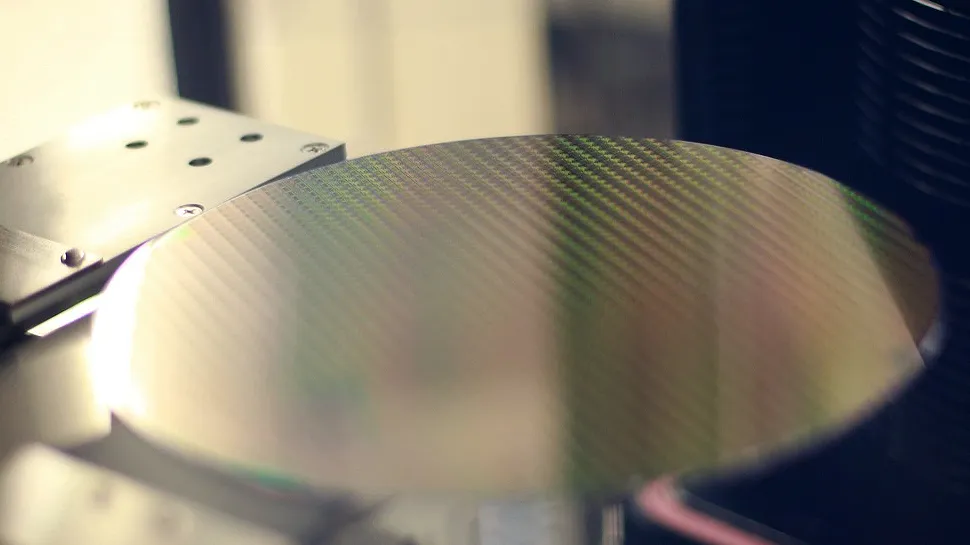

Chinese President Xi Jinping told Dutch Prime Minister Mark Rutte at a recent meeting that efforts to limit China's access to technological advancements would not deter the nation's progress. This discussion came after the Netherlands imposed export controls on advanced chipmaking tools in alignment with U.S. efforts to restrict China's access to advanced technology out of concern for national security, according to a report by the Associated Press.

"The Chinese people also have the right to legitimate development, and no force can stop the pace of China's scientific and technological development and progress," Xi said.

The Netherlands' decision to enforce export licensing on ASML's lithography equipment, which can be used to make logic chips on 14nm and more advanced process technologies, is a big deal for Chinese semiconductor makers such as SMIC and YMTC. SMIC recently partnered with Huawei to produce a 7nm-class smartphone processor using ASML's advanced deep ultraviolet (DUV) litho tools, and the two companies are reportedly working on making 5nm-class chips using these machines.

The ongoing tension between the U.S. and China over technology access has led China to accuse the U.S. of hindering its economic development. The U.S. does not want China to have access to high-performance processors that could be used for artificial intelligence and high-performance computing applications, as powerful supercomputers could be used to develop China's military capabilities, as well as weapons of mass destruction. This is why American companies such as AMD, Intel, and Nvidia can no longer sell their latest products to Chinese entities without an export license from the U.S. government.

The U.S. does not want China to be able to produce its own AI and HPC processors for the same reasons. To ensure this, the U.S. has restricted American companies from selling advanced wafer fab equipment (WFE) to China-based entities, and has managed to persuade Japan, the Netherlands, and Taiwan to do the same — which has obviously upset China.

-

forms were already established by then. Now, the popular messaging platform is tipped to add international payments with an activation period of up to three months in order to boost the user base of its financial service.

The information about the feature was shared by tipster @AssembleDebug who said in a post on X (formerly known as Twitter), “International Payments on WhatsApp through UPI for Indian users. This is currently not available for users. But WhatsApp might be working on it as I couldn't find anything on Google about it.” The tipster also shared screenshots of the feature but did not reveal which beta version added it.

In the screenshot, a new option can be seen in the Payments menu underneath the Forgot UPI PIN option. The tipped feature is labelled International payments and when clicked, it opens a separate screen where users can choose the start and end dates for the feature and turn it on. As per the screenshot, users will be required to enter the UPI PIN to turn on the feature.

International payments allow users with an Indian bank account to send money to select international merchants and complete transactions. The feature will only work in countries where banks have enabled international UPI services. In India, international UPI payments are enabled only for a set period, after which the feature expires automatically and must be activated manually again. As per the tipster, this period in WhatsApp could be three months; in contrast, Google Pay offers a transaction period of seven days. Notably, Google Pay, PhonePe, and some other major players in the UPI space already offer the service.
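WhatsApp's implementation isn't public. The minimal sketch below assumes the window is measured in calendar months from the chosen start date and that both dates are inclusive. Under those assumptions, it checks that a requested window does not exceed the tipped three-month limit and that payments are allowed only inside it. The function names and the clamping of the day of the month are hypothetical choices.

```python
import calendar
from datetime import date

MAX_WINDOW_MONTHS = 3  # tipped WhatsApp limit (assumption about how it is counted)


def add_months(d, months):
    """Add calendar months, clamping the day to the target month's length."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def window_is_valid(start, end):
    """The end date must not precede the start or exceed the allowed period."""
    return start <= end <= add_months(start, MAX_WINDOW_MONTHS)


def international_enabled(start, end, today):
    """Payments work only inside the window; afterwards the user must re-enable."""
    return start <= today <= end
```

For example, a window from April 1 to July 1 passes the check, but one ending July 2 does not. Changing `MAX_WINDOW_MONTHS` is all it would take to model a different provider's limit, although Google Pay's seven-day period would be counted in days rather than months.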

-

https://techxplore.com/news/2024-03-food-safety-stage-classification-ingredients.html

Research published in the International Journal of Reasoning-based Intelligent Systems discusses a new approach to identifying ingredients in photographs of food. The work could support future food safety efforts.

Sharanabasappa A. Madival and Shivkumar S. Jawaligi of Sharnbasva University in Kalburgi, Karnataka, India, used a two-stage process of feature extraction and classification to improve on previous approaches to ingredient identification.

The team explains that their approach uses scale-invariant feature transform (SIFT) and convolutional neural network (CNN)-based deep features to extract both image and textual features. Once extracted, the features are fed into a hybrid classifier that merges neural network (NN) and long short-term memory (LSTM) models.

The team adds that the precision of their model can be further refined by applying the Chebyshev map evaluated teamwork optimization (CME-TWO) algorithm. Together, these steps lead to accurate identification of the ingredients.
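The article does not describe how CME-TWO works internally. Chaos-enhanced metaheuristics often replace uniform random numbers with values from a chaotic map. One common choice is the Chebyshev map, x_{k+1} = cos(a · arccos(x_k)), which keeps values in [-1, 1]. The sketch below generates such a sequence and rescales it to a search interval, as might be done to seed candidate weights. The parameter a = 4, the starting value 0.7 and the rescaling step are assumptions, and this is not the authors' CME-TWO algorithm.

```python
import math


def chebyshev_sequence(x0, n, a=4):
    """Iterate the Chebyshev map x_{k+1} = cos(a * arccos(x_k)).

    x0 must lie in [-1, 1]; every output stays in [-1, 1].
    """
    if not -1.0 <= x0 <= 1.0:
        raise ValueError("x0 must be in [-1, 1]")
    xs, x = [], x0
    for _ in range(n):
        x = math.cos(a * math.acos(x))
        xs.append(x)
    return xs


def chaotic_init(lower, upper, size, x0=0.7):
    """Map chaotic values from [-1, 1] onto [lower, upper], e.g. to seed
    candidate weights for a population-based optimizer (illustrative)."""
    return [lower + (x + 1) / 2 * (upper - lower)
            for x in chebyshev_sequence(x0, size)]
```

With a = 4 the first iterate from 0.7 is the Chebyshev polynomial T₄(0.7) = 8(0.7)⁴ − 8(0.7)² + 1 = −0.9992. Later values wander across the interval without settling, which is the property such optimizers rely on to explore the search space.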

Food management in a globalized world is critical to supply chains, food security, traceability, and the detection of fake food and food fraud. As consumers and diners, we need to know that the ingredients in the food we eat are what they claim to be, especially in the context of diverse dietary preferences and health considerations.

The team found that their approach works more effectively than current ingredient identification systems. Specifically, they demonstrated that the hybrid classifier combined with CME-TWO (HC + CME-TWO) performs best by a large margin, which they take as a significant advancement in this area. The marked improvement in accuracy and reliability comes from the hybrid classifier and from fine-tuning its weights with the CME-TWO algorithm. However, the team says there is still room to shorten processing times through further optimization.

The work focuses on food safety but could be used to address the challenges facing regulators and others attempting to ensure food authenticity, especially among high-value foods.

-

Intel announced two new extensions to its AI PC Acceleration Program in Taipei, Taiwan, with a new PC Developer Program that’s designed to attract smaller Independent Software Vendors (ISVs) and even individual developers, and an Independent Hardware Vendors (IHV) program that assists partners developing AI-centric hardware. Intel is also launching a new Core Ultra Meteor Lake NUC development kit, and introducing Microsoft’s new definition of just what constitutes an AI PC. We were given a glimpse of how AI PCs will deliver better battery life, higher performance, and new features.

Intel launched its AI Developer Program in October of last year, but it's kicking off its new programs here at a developer event in Taipei that includes hands-on lab time with the new dev kits. The program aims to arm developers with the tools needed to develop new AI applications and hardware, which we’ll cover more in-depth below.

Intel plans to deliver over 100 million PCs with AI accelerators by the end of 2025. The company is already engaging with 100+ AI ISVs for PC platforms and plans to have over 300 AI-accelerated applications in the market by the end of 2024. To further those efforts, Intel is planning a series of local developer events around the globe at key locations, like the recent summit it held in India. Intel plans to have up to ten more events this year as it works to build out the developer ecosystem.

The battle for control of the AI PC market will intensify over the coming years — Canalys predicts that 19% of PCs shipped in 2024 will be AI-capable, but that number will increase to 60% by 2027, highlighting a tremendous growth rate that isn’t lost on the big players in the industry. In fact, AMD recently held its own AI PC Innovation Summit in Beijing, China, to expand its own ecosystem. The battle for share in the expanding AI PC market begins with silicon that enables the features, but it ends with the developers that turn those capabilities into tangible software and hardware benefits for end users. Here’s how Intel is tackling the challenges.

The advent of AI presents tremendous opportunities to introduce new hardware and software features to the tried-and-true PC platform, but the definition of an AI PC has been a bit elusive. Numerous companies, including Intel, AMD, Apple, and soon Qualcomm with its X Elite chips, have developed silicon with purpose-built AI accelerators residing on-chip alongside the standard CPU and GPU cores. However, each has its own take on what constitutes an AI PC.

Microsoft’s and Intel’s new co-developed definition states that an AI PC will come with a Neural Processing Unit (NPU), CPU, and GPU that support Microsoft’s Copilot, along with a physical Copilot key on the keyboard that replaces the second Windows key on the right side. Copilot is an AI chatbot powered by a large language model (LLM) that is currently being rolled out to newer versions of Windows 11. It currently relies on cloud-based services, but the company reportedly plans to enable local processing to boost performance and responsiveness. This definition means that existing Meteor Lake and Ryzen laptops that shipped without a Copilot key don't actually meet Microsoft's criteria, though we expect the new definition to spur broader adoption of the Copilot key.

While Intel and Microsoft are now promoting this jointly developed definition of an AI PC, Intel itself has a simpler definition that says it requires a CPU, GPU, and NPU, each with its own AI-specific acceleration capabilities. Intel envisions shuffling AI workloads between these three units based on the type of compute needed, with the NPU providing exceptional power efficiency for lower-intensity AI workloads like photo, audio, and video processing while delivering faster response times than cloud-based services, thus boosting battery life and performance while ensuring data privacy by keeping data on the local machine. This also frees the CPU and GPU for other tasks. The GPU and CPU will step in for heavier AI tasks, a must as having multiple AI models running concurrently could overwhelm the comparatively limited NPU. If needed, the NPU and GPU can even run an LLM in tandem.
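Intel hasn't published its scheduling policy. The hypothetical sketch below only encodes the division of labour described above: light jobs go to the NPU while it has room, heavier jobs go to the GPU, and the CPU picks up light work when the NPU is already occupied. The two-level intensity labels and the one-job NPU capacity are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    intensity: str  # assumed labels: "light" or "heavy"


def pick_unit(job, npu_busy):
    """Hypothetical routing rule mirroring the description above."""
    if job.intensity == "heavy":
        return "GPU"  # heavier models go to the GPU
    if not npu_busy:
        return "NPU"  # power-efficient home for light, sustained work
    return "CPU"      # light work spills over when the NPU is occupied


def assign(jobs):
    """Route jobs in order, assuming the NPU holds one light job at a time."""
    npu_busy = False
    plan = {}
    for job in jobs:
        unit = pick_unit(job, npu_busy)
        if unit == "NPU":
            npu_busy = True
        plan[job.name] = unit
    return plan
```

For a video call running background blur and noise suppression while an image generator works in the background, this rule places blur on the NPU, noise suppression on the CPU and image generation on the GPU.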

AI models also have a voracious appetite for memory capacity and speed, with the former enabling larger, more accurate models and the latter delivering more performance. AI models come in all shapes and sizes, and Intel says that memory capacity will become a key challenge when running LLMs: 16GB is required for some workloads, and even 32GB may be necessary depending on the types of models used.
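To see why 16GB or 32GB can come into play, a common back-of-the-envelope estimate is that the model weights alone need parameters × bits per parameter ÷ 8 bytes. The model sizes and precisions below are illustrative examples, not figures from Intel. The estimate also ignores the KV cache, activations, the OS and other applications, all of which add to the total.

```python
def weight_memory_gb(params_billion, bits_per_param):
    """Decimal GB needed for the weights alone (no KV cache or activations)."""
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


for params, bits in [(7, 16), (7, 4), (13, 16)]:
    print(f"{params}B params @ {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

A 7-billion-parameter model needs about 14 GB at 16-bit precision but about 3.5 GB when quantized to 4 bits. A 13-billion-parameter model at 16 bits (about 26 GB) would already outgrow a 16GB machine.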

Naturally, that could add quite a bit of cost, particularly in laptops, but Microsoft has stopped short of defining a minimum memory requirement for now. Instead, it will continue to work through different configuration options with OEMs. The goalposts will differ for consumer-class hardware as opposed to workstations and enterprise gear, but we should expect to see more DRAM on entry-level AI PCs than the standard fare; we may finally bid adieu to 8GB laptops.

Intel says that AI will enable a host of new features, but many of the new use cases are undefined because we remain in the early days of AI adoption. Chatbots and personal assistants trained locally on users' data are a logical starting point, and Nvidia's Chat with RTX and AMD's chatbot alternative are already out there, but AI models running on the NPU can also make better use of the existing hardware and sensors present on the PC. For instance, coupling gaze detection with power-saving features in OLED panels can enable lower refresh rates when acceptable, or switch the screen off when the user leaves the PC, thus saving battery life. Video conferencing also benefits from techniques like background segmentation, and moving that workload from the CPU to the NPU can save up to 2.5W. That doesn’t sound like much, but Intel says it can result in an extra hour of battery life in some cases.
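Intel didn't give the battery capacity or power draw behind its extra-hour figure. The underlying arithmetic is simply runtime = battery energy ÷ average power, so the gain from saving 2.5W depends on how much the system draws to begin with. The 60 Wh battery and 12 W average draw used below are hypothetical values chosen for illustration.

```python
def runtime_hours(battery_wh, avg_power_w):
    """Runtime is stored energy divided by average power draw."""
    return battery_wh / avg_power_w


def extra_hours(battery_wh, avg_power_w, saved_w=2.5):
    """Extra runtime from shaving saved_w watts off the average draw."""
    if saved_w >= avg_power_w:
        raise ValueError("saving cannot exceed the total draw")
    return runtime_hours(battery_wh, avg_power_w - saved_w) - runtime_hours(battery_wh, avg_power_w)


# Hypothetical laptop: 60 Wh battery, 12 W average draw on a video call
print(round(extra_hours(60, 12), 2))
```

With those values, runtime rises from 5.0 hours to roughly 6.3 hours. The same 2.5W saving buys less time when the system is drawing more power overall, which fits Intel's "in some cases" caveat.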

Other uses include eye gaze correction, auto-framing, background blurring, background noise reduction, audio transcription, and meeting notes, some of which are being built to run on the NPU with direct support from companies like Zoom, Webex, and Google Meet, among others. Companies are already working on coding assistants that learn from your own code base, and others are developing Retrieval-Augmented Generation (RAG) LLMs that use the user's own data as a searchable knowledge base when answering queries, thus providing more specific and accurate information.
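Retrieval-augmented generation works by fetching relevant passages from the user's data and placing them in the prompt, rather than retraining the model. The sketch below shows only the retrieval and prompt-assembly steps, using simple word-count cosine similarity as a stand-in for the learned embeddings real systems typically use. The sample notes and function names are hypothetical, and no actual LLM is called.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split into alphanumeric words.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two word-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank local documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Put the retrieved passages in front of the question for the LLM to use.
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


notes = [
    "Quarterly budget review is scheduled for Friday with the finance team.",
    "The laptop battery warranty expires in March.",
    "Finance team asked for the budget spreadsheet by Thursday.",
]
print(build_prompt("When is the budget review?", notes))
```

Because the user's data is only searched at query time, new notes become usable immediately without touching the model's weights, which is also why this approach suits on-device assistants.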

Other workloads include image generation along with audio and video editing, such as the features being worked into the Adobe Creative Cloud software suite. Security is also a big focus, with AI-powered anti-phishing software already in the works, for instance. Intel’s own engineers have also developed a sign-language-to-text application that uses video detection to translate sign language, showing that there are many unthought-of applications that can deliver incredible benefits to users.

The Core Ultra Meteor Lake Dev Kit


The Intel dev kit consists of an ASUS NUC Pro 14 with a Core Ultra Meteor Lake processor, but Intel hasn't shared the detailed specs yet. We do know that the systems will come in varying form factors. Every system will also come with a pre-loaded software stack, programming tools, compilers, and the drivers needed to get up and running. Installed tools include CMake, Python, and OpenVINO, among others. Intel also supports ONNX, DirectML, and WebNN, with more coming. Intel's OpenVINO model zoo currently has over 280 open-source and optimized pre-trained models. It also has 173 models for ONNX and 150 models on Hugging Face, and the most popular models have over 300,000 downloads per month.
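Since OpenVINO can target the NPU, GPU, and CPU, a common pattern is to pick the best available device at startup. The helper below is a hypothetical sketch of that fallback order; it works on a plain list of device names, so it runs without OpenVINO installed.

```python
def pick_device(available: list[str], preference: tuple[str, ...] = ("NPU", "GPU", "CPU")) -> str:
    """Return the first preferred device present in `available`.

    Device names can carry an index suffix (e.g. "GPU.0" on systems with
    several GPUs), so a name matches either exactly or by "<name>." prefix.
    """
    for wanted in preference:
        for dev in available:
            if dev == wanted or dev.startswith(wanted + "."):
                return dev
    raise RuntimeError("no supported inference device found")


print(pick_device(["CPU", "GPU.0", "NPU"]))
```

In recent OpenVINO releases, the `available` list could come from `openvino.Core().available_devices`, and the chosen name could be passed to `Core.compile_model(model, device)`. OpenVINO's built-in "AUTO" device performs a similar selection on its own, so a hand-written helper like this is mainly useful when you want explicit control over the order.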

Expanding the Ecosystem

Intel is already working with its top 100 ISVs, like Zoom, Adobe and Autodesk, to integrate AI acceleration into their applications. Now it wants to broaden its developer base to smaller software and hardware developers — even those who work independently.

To that end, Intel will provide developers with its new dev kit at the conferences it has scheduled around the globe, with the first round of developer kits being handed out here in Taipei. Intel will also make dev kits available for those who can’t attend the events, but it hasn’t yet started that part of the program due to varying restrictions in different countries and other logistical challenges.

These kits will be available at a subsidized cost, meaning Intel will provide a deep discount, but the company hasn’t shared details on pricing yet. There are also plans to give developers access to dev kits based on Intel’s future platforms.

Aside from providing hardware to the larger dev houses, Intel is also planning to seed dev kits to universities to engage with computer science departments. Intel has a knowledge center with training videos, documentation, collateral, and even sample code on its website to support the dev community.

Intel is engaging with Independent Hardware Vendors (IHVs) that will develop the next wave of devices for AI PCs. The company offers 24/7 access to Intel’s testing and process resources, along with early reference hardware, through its Open Labs initiative in the US, China, and Taiwan. Intel already has 100+ IHVs that have developed 200 components during the pilot phase.

ISVs and IHVs interested in joining Intel’s PC Acceleration Program can join via the webpage. We’re here at the event and will follow up with updates as needed.

-

¤ Nick: Inmortal

¤ Rank: slot

¤ Desired tag: PH SQUAD Co-Led | The Drug

¤ Link to your hours played on GameTracker (Gametracker.rs): https://www.gametracker.rs/player/135.125.249.129:27015/Inmortal/


[Software] NASA noise prediction tool supports users in air taxi industry

https://techxplore.com/news/2024-04-nasa-noise-tool-users-air.html

Several air taxi companies are using a NASA-developed computer software tool to predict aircraft noise and aerodynamic performance. This tool allows manufacturers working in fields related to NASA's Advanced Air Mobility mission to see early in the aircraft development process how design elements like propellers or wings would perform. This saves the industry time and money when making potential design modifications.

This NASA computer code, called OVERFLOW, performs calculations to predict fluid flows, such as airflow, along with the resulting pressures, forces, moments, and power requirements on the aircraft. Since these flows contribute to aircraft noise, improved predictions can help engineers design quieter models.
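For context on the quantities involved, aerodynamic results are commonly expressed as nondimensional force coefficients, which convert to forces through the dynamic pressure q = 0.5 * rho * V^2 via F = q * S * C. This is the standard textbook relation, not a description of OVERFLOW's internals. The airspeed, reference area, and coefficient below are illustrative assumptions, not data from NASA or any air taxi manufacturer.

```python
SEA_LEVEL_DENSITY = 1.225  # kg/m^3, standard-atmosphere air density at sea level


def dynamic_pressure(density: float, speed: float) -> float:
    """q = 0.5 * rho * V^2, in pascals."""
    return 0.5 * density * speed ** 2


def force_from_coefficient(coeff: float, density: float, speed: float, ref_area: float) -> float:
    """F = q * S * C, in newtons."""
    return dynamic_pressure(density, speed) * ref_area * coeff


# Hypothetical case: 50 m/s airspeed, 10 m^2 reference area, lift coefficient 0.5.
lift = force_from_coefficient(coeff=0.5, density=SEA_LEVEL_DENSITY, speed=50.0, ref_area=10.0)
print(f"Lift: {lift:.0f} N")
```

Because q grows with the square of airspeed, doubling the speed quadruples the force for the same coefficient, which is one reason engineers evaluate a design across many flight conditions.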

Manufacturers can integrate the code with their own aircraft modeling programs to run different scenarios, quantify performance and efficiency, and visually interpret how the airflow behaves on and around the vehicle. These visualizations can be rendered in a range of colors that represent the flow behaviors.